|

jdk9405@umd.edu | jdk9405@gmail.com

I am a second-year Ph.D. student in Computer Science at the University of Maryland, College Park (UMD), working with Prof. Dinesh Manocha in the GAMMA Lab. At UMD, I've worked on 3D reconstruction and neural rendering.

Previously, I was a research scientist at NAVER LABS, where I worked on visual localization and mapping for robotics.

Email / CV / Google Scholar |

|

|

I am currently focusing on research in 3D multi-view foundation models. I am also interested in combining classical geometry with modern deep learning methods to advance 3D vision. |

|

Jaehoon Choi, Dongki Jung, Yonghan Lee, Sungmin Eum, Dinesh Manocha, and Heesung Kwon [arXiv] [Project] We present a method for creating digital twins from real-world environments and facilitating data augmentation for training downstream models embedded in unmanned aerial vehicles (UAVs). |

|

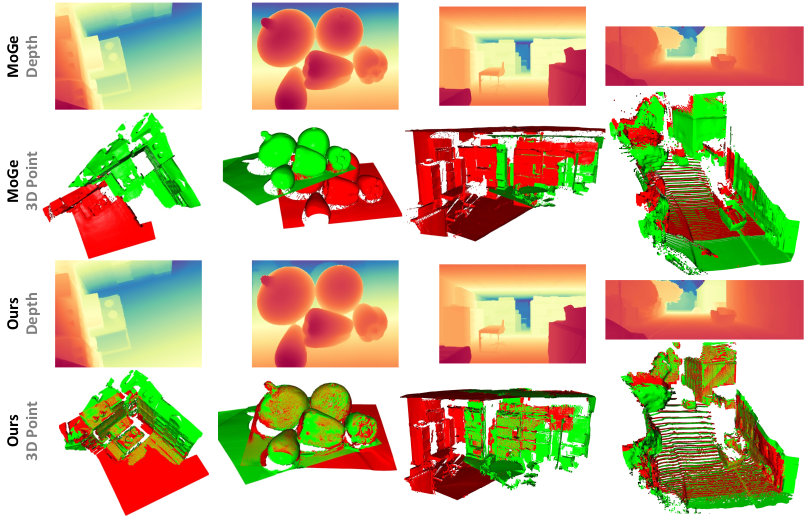

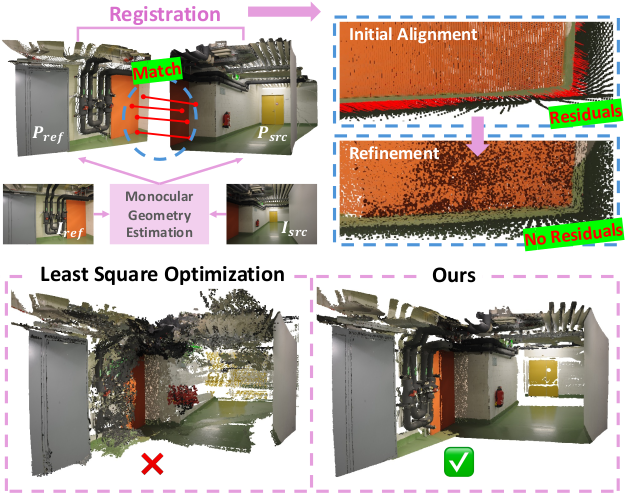

Dongki Jung, Jaehoon Choi, Yonghan Lee, Sungmin Eum, Heesung Kwon, Dinesh Manocha WACV, 2026 We propose MoRe, a training-free Monocular Geometry Refinement method designed to improve cross-view consistency and achieve scale alignment. |

|

Jaehoon Choi, Dongki Jung, Christopher Maxey, Sungmin Eum, Yonghan Lee, Dinesh Manocha, and Heesung Kwon AAAI, 2026 [Project] We introduce UAV4D, a framework that enables photorealistic rendering of dynamic real-world scenes captured by UAVs. |

|

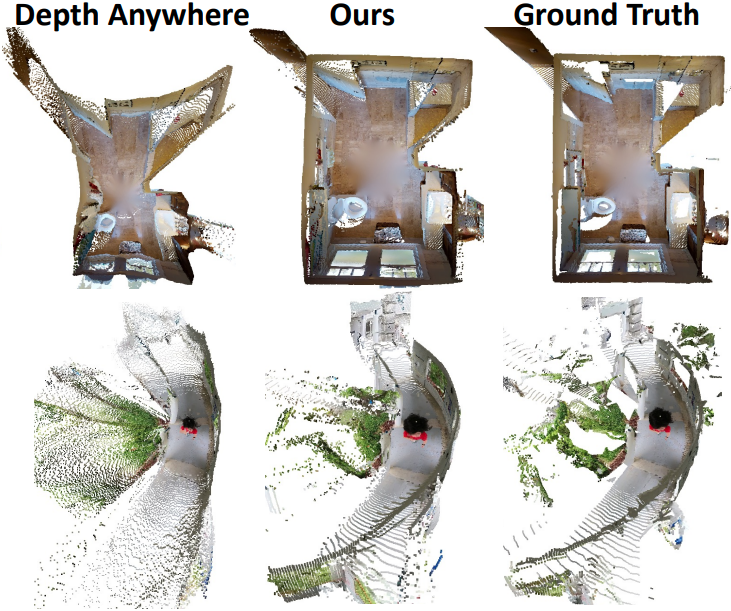

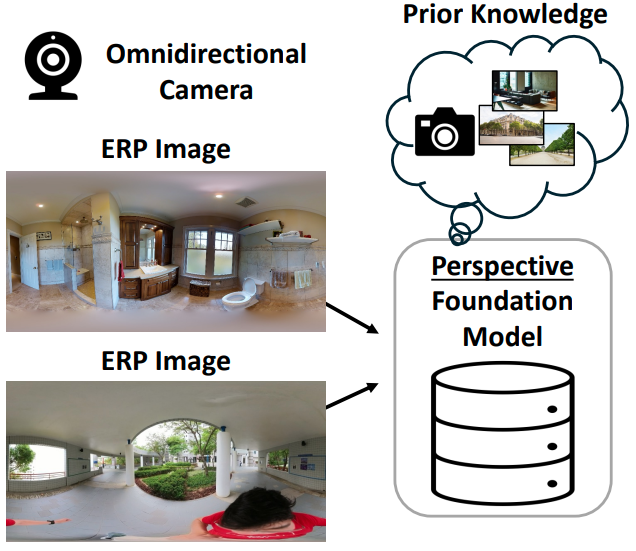

Dongki Jung, Jaehoon Choi, Yonghan Lee, Dinesh Manocha NeurIPS, 2025 [Project] RPG360 leverages the prior knowledge of a perspective foundation model along with graph optimization, thereby enhancing 3D structural awareness and achieving superior performance. |

|

Dongki Jung*, Jaehoon Choi*, Yonghan Lee, Dinesh Manocha ICCV, 2025 [Project] We propose a complete pipeline for indoor mapping using omnidirectional images, consisting of three key stages: (1) Spherical SfM, (2) Neural Surface Reconstruction, and (3) Texture Optimization. |

|

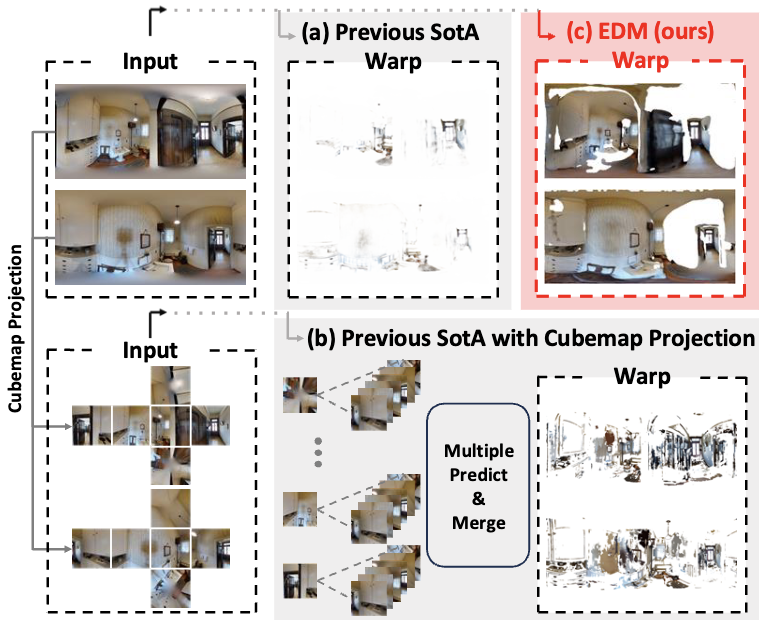

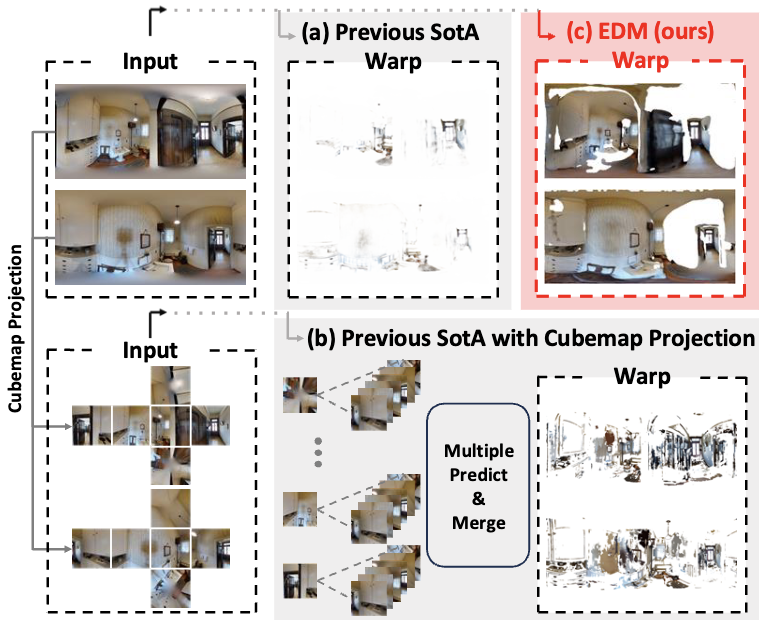

Dongki Jung, Jaehoon Choi, Yonghan Lee, Somi Jeong, Taejae Lee, Dinesh Manocha, Suyong Yeon CVPR, 2025 [Project] We propose the first learning-based dense matching algorithm for omnidirectional images. |

|

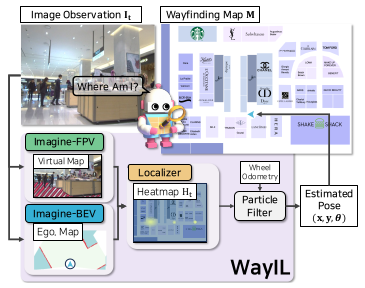

Obin Kwon, Dongki Jung, Youngji Kim, Soohyun Ryu, Suyong Yeon, Songhwai Oh, Donghwan Lee ICRA, 2024 We address robot localization in large-scale indoor environments using wayfinding maps. |

|

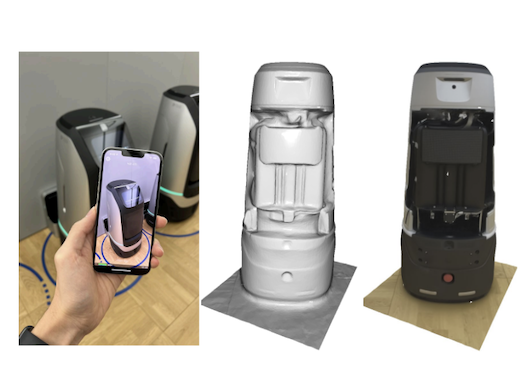

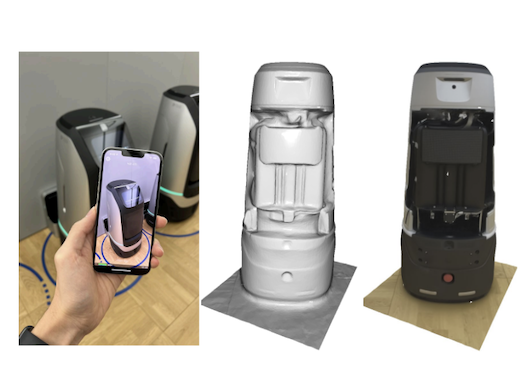

Jaehoon Choi, Dongki Jung, Taejae Lee, Sangwook Kim, Youngdong Jung, Dinesh Manocha, Donghwan Lee CVPR, 2023 [Project] We present a new pipeline for acquiring a textured mesh in the wild with a mobile device. |

|

Jaehoon Choi*, Dongki Jung*, Yonghan Lee, Deokhwa Kim, Dinesh Manocha, Donghwan Lee ICRA, 2022 We develop a self-supervised fine-tuning method for metrically accurate depth estimation. |

|

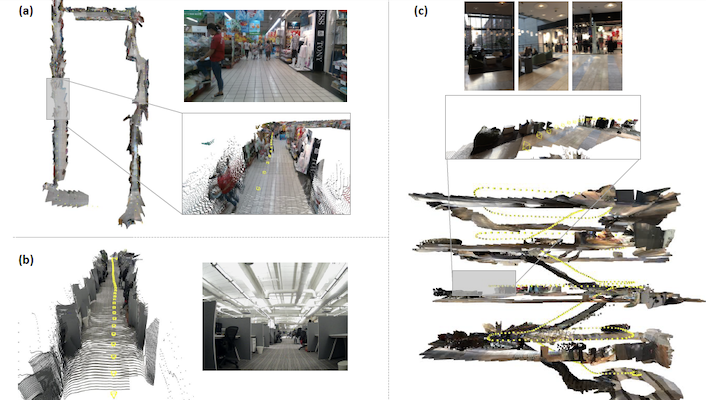

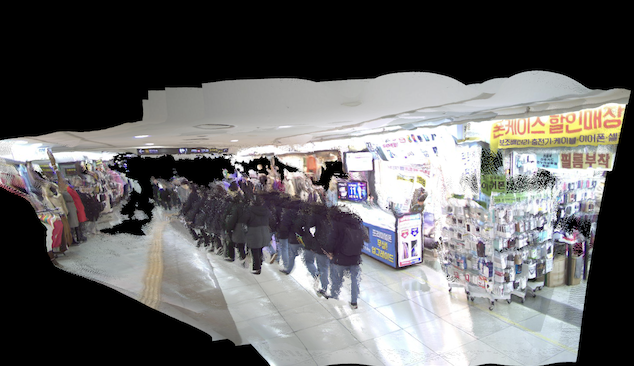

Dongki Jung*, Jaehoon Choi*, Yonghan Lee, Deokhwa Kim, Changick Kim, Dinesh Manocha, Donghwan Lee ICCV, 2021 We present a novel approach for estimating depth from a monocular camera as it moves through complex and crowded indoor environments. |

|

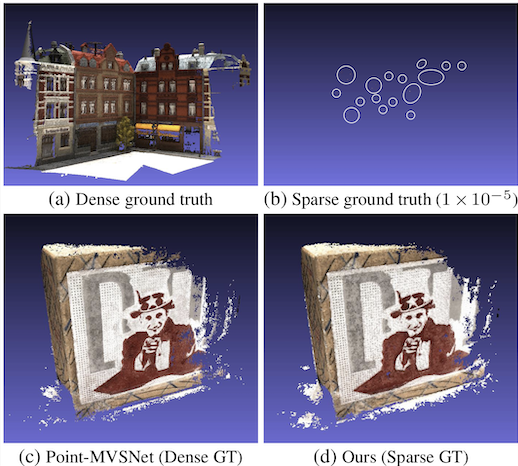

Taekyung Kim, Jaehoon Choi, Seokeon Choi, Dongki Jung, Changick Kim ICCV, 2021 We introduce a novel semi-supervised multi-view stereo framework. |

|

|

Jaehoon Choi, Dongki Jung, Yonghan Lee, Deokhwa Kim, Dinesh Manocha, Donghwan Lee ICRA, 2021 We present a novel algorithm for self-supervised monocular depth completion in challenging indoor environments. |

|

|

Jaehoon Choi*, Dongki Jung*, Donghwan Lee, Changick Kim NeurIPS Workshop on Machine Learning for Autonomous Driving, 2020 We propose SAFENet, which is designed to leverage semantic information to overcome the limitations of the photometric loss. |

|

|

Dongki Jung, Seunghan Yang, Jaehoon Choi, Changick Kim ICIP, 2020 We present a novel learnable normalization technique for style transfer using graph convolutional networks. |

|

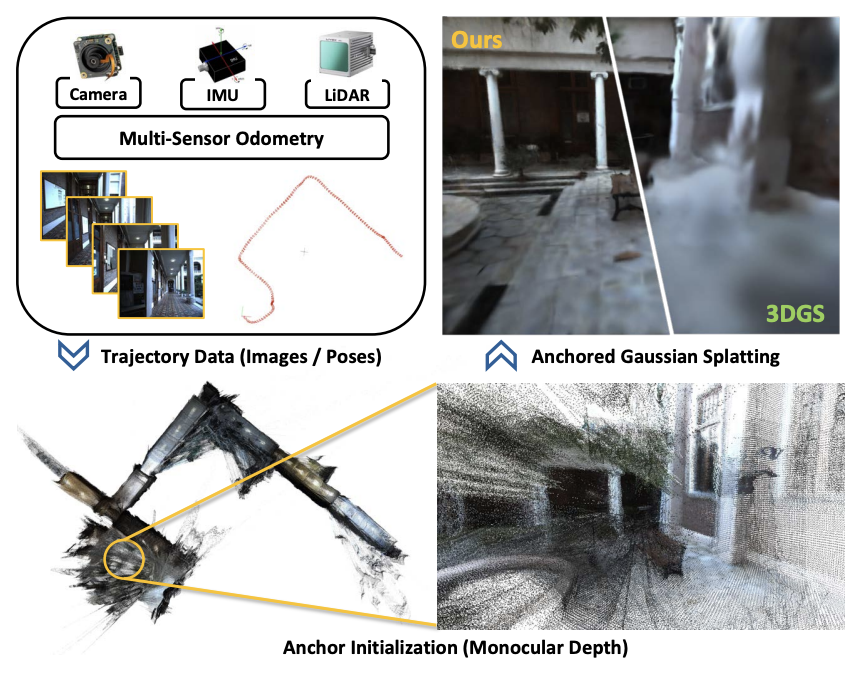

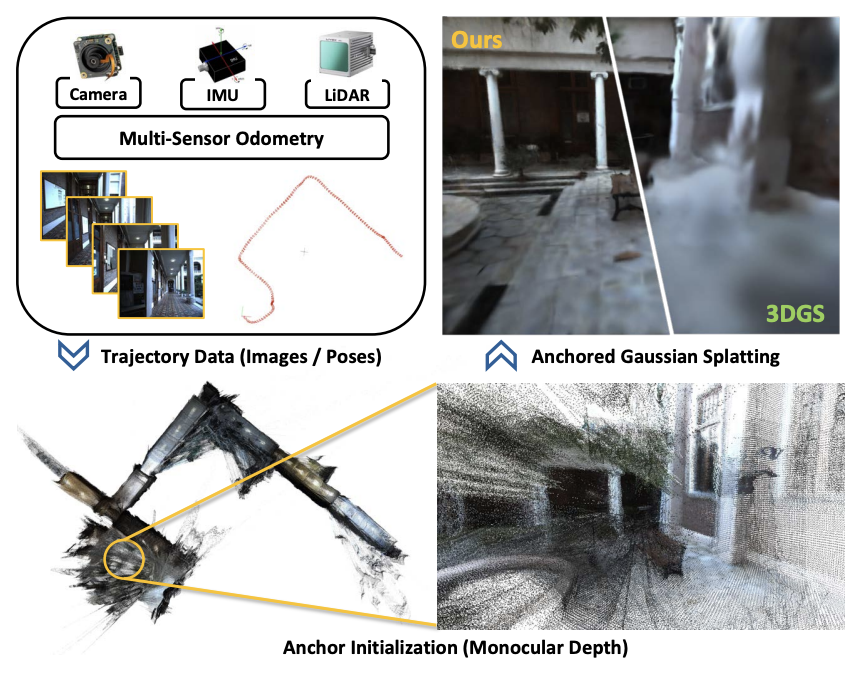

Yonghan Lee, Jaehoon Choi, Dongki Jung, Jaeseong Yun, Soohyun Ryu, Dinesh Manocha, and Suyong Yeon [arXiv], 2024 We propose a novel 3D Gaussian Splatting algorithm that integrates a monocular depth network with anchored Gaussian Splatting, enabling robust rendering performance on sparse-view datasets. |

|

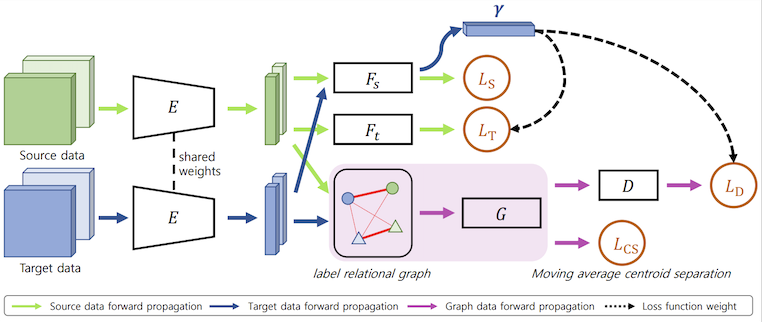

Seunghan Yang, Youngeun Kim, Dongki Jung, Changick Kim arXiv, 2020 We propose a graph partial domain adaptation network, which exploits Graph Convolutional Networks. |

|

|